As I sit down to write about my news digest feature for this website, I'm struck by the realization that we're living through a war – not one solely fought with bombs and other weapons of destruction, but with information. It's a battle for truth, where truth is used as the weapon itself and where honest reporting is often lost in a sea of misinformation and intentional confusion spread by those seeking to manipulate public opinion.

I've come to understand just how pervasive and destructive this type of warfare, also called information warfare in a military setting, has been in our modern media landscape. While it's natural to feel hopeless in the face of such disinformation and tactics, actually it's by design, I believe there's hope on the horizon. This hope lies in our ability to master the technologies that distributes information – whether through traditional or alternative sources.

I, very faintly, remember a time when the internet wasn't as ubiquitous as it is now. But instead of romanticizing the past, I'd rather focus on harnessing these technologies to make sense of the world around us. My goal with this news digest feature is to provide an overview of both mainstream and alternative news, while avoiding getting swept up in what I call the 'media currents'.

Just like strong currents at sea can be treacherous and even deadly, we need to be cautious when interacting with information irrespective of it's source. This isn't just a matter of emotional toll; it also takes a physical one. If there's something to this whole information warfare strategy having been deployed on the western countries, then we would know by registering within ourselves how we feel and how high or low our morale is after having engaged with a piece of content. I personally haven't met anyone who haven't been subjected to these very careful warfare information campaigns.

As Bob Dylan so aptly captured in his song: "The times are changing,” our information landscape is indeed evolving. Traditional media has been a cornerstone for centuries, with formats shifting over time. We've transitioned from the pioneering Gutenberg press to printed newspapers, which remained a dominant driver of public perception well into the 20th century.

As technology advanced, media adapted. Radio and television became ubiquitous in households, television, however, experienced a stunted growth due to the war efforts at the time, prompting newspapers to evolve once again. This marked a significant turning point, as the masses gained access to diverse sources of information. By World War II, people could stay informed about global events through radio reports, such as president Roosevelt's fireside chats, or catch up on the latest news at the cinema with heroic moving pictures of young men given their lives for to save their country from tyranny. How so much has changed, yet the same patterns keep reappearing time and time again.

Nearing the end of 2022, Elon Musk acquired Twitter, rebranded it as X, and gained access to a vast user base complete with a slew of bot accounts. In his words, which may be a footnote in the history books but are still significant:

You, the people, must be the media, as it is the only way for your fellow citizens to know the truth.

As someone who was present at the 2015 Krudttønden terror attack, I recall turning on the TV as soon as I returned to my apartment from the park where the incident occurred. To my surprise, it took the media around 45 minutes to an hour to report the news, which was already being discussed on Twitter by others who had also witnessed the event. In fact, eyewitnesses at the scene had reported the shooting well before traditional media outlets could verify the information.

I believe that authenticity is just as important as verification in journalism. Citizen reporting, as it's called, can be a valuable tool in the future because it provides immediate access to firsthand accounts from those directly involved. This unfiltered perspective can provide a more accurate and timely representation of events, especially during breaking news situations. These first hand accounts can help shed light on a story does not have to go through a subjective lense of editorial team who can decide, based on their own reasoning and belief system, to leave out crucial bits of information, maybe because it doesn't adhere to their own belief strcuture, or maybe because they are tapped on the shoulder and told not to bring a story under the threat of violence and repercussion.

Hype and Hyperbole: The Rise of Large Language Models

As I was brainstorming the concept of creating a news digest, large language models (LLMs) were making headlines due to the groundbreaking advancements achieved by the OpenAI team.

As with many mainstream media outlets, the more you know about a story, the more issues you tend to have with how it's reported. This is especially true when discussing artificial intelligence. The topic is often shrouded in fear and apocalyptic warnings of AI taking over jobs, reminiscent of Hollywood movies like "The Terminator" that depict AI controlling our planet. However, if you listen to the experts working on these technologies and understand the difference between large language models (LLMs) and AI, they'll paint a far more nuanced picture than what's being broadcasted by legacy media outlets.

The concept of large language models (LLMs) isn't new, but recent breakthroughs in architecture and data handling have led to unprecedented accuracy. This has prompted a thorough reexamination of LLMs' potential across all sectors of industry and business.

Large language models (LLMs) are trained on vast amounts of data to build a corpus of documents. This corpus is then iterated over and every word in every sentence is predicted upon each iteration until it can stitch together an output response to a users prompt with the highest next word predictor score. It's a statistical model in other words if you ask a mathematician, but if you ask anyone working at a startup in Sillicon Valley they would use the dressed up version of the word which is machine learning.

The legal implications are clear, but unclear is whether these products are built using allegedly public data or private sources without permission. Have authors and creators given consent for AI companies to use their works - articles, books, and more - to train LLMs? One whistleblower case that warrants attention involves a former OpenAI employee who claimed the company was breaching copyright laws while extracting data for its models. The employee's sudden death, ruled out as a suicide, has raised suspicions, but the motive is clear: OpenAI stands to gain millions if not billions of dollars by using publicly and perhaps privately sourced internet data without permission from the content owners. Watching an interview with the whistleblower's mother and staying informed about potential legal cases are crucial as the story is still unfolding as we speak and could have ramifications for the new AI companies hedging their bets on this future.

Another important aspect to take into consideration is garbage in garbage out. The quality of the training data, in other words, very much determines the quality of the responses these models can produce. This is particularly a problem with AI generated code snippets, as many developers with a wide audience has been quick to point out. But the case is just as valid and important to note for all other sectors where LLM's are being used and deployed. Also because of the lack of human generated training data, companies like OpenAI are now feeding their models with AI generated training data instead, in order to keep up the seemingly impressive improvement stats and performance figures. This is also leading to a deterioration, rather than an improvement of the models responses.

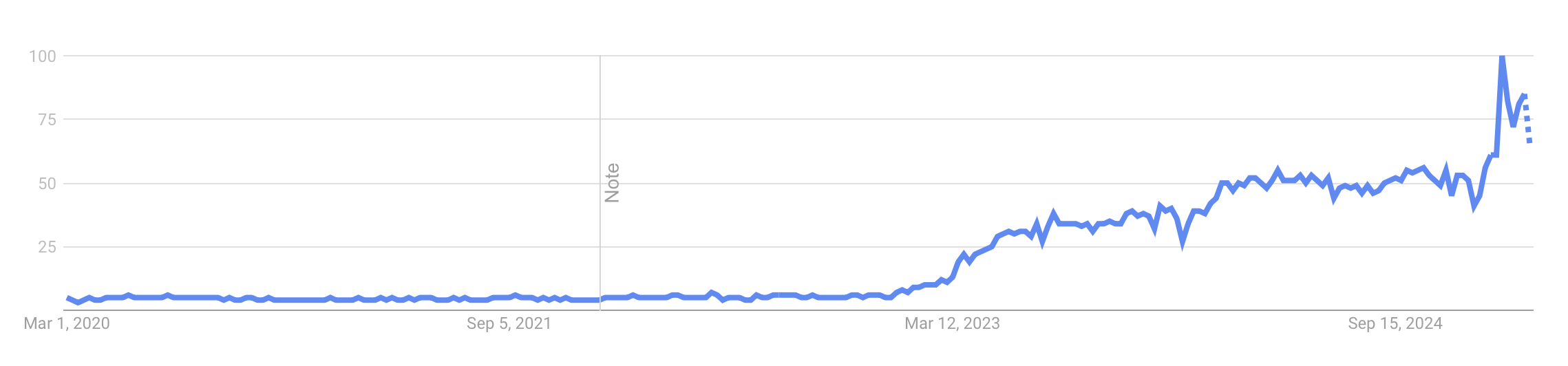

I began by trying to understand what all the hype was about with large language models (LLMs). One day, while commuting to work, I noticed a sudden spike in Google trends around April-May 2024. That's when it hit me: why not create a simple news summarizer? It seemed like a valuable feature for the newspaper I was considering reviving at the time, which had been on hold as I explored other projects and ways to engage with my audience.

From design to delivery

I recognized the need for a tool that would give readers a comprehensive view of current events, taking into account the rapid evolution of our media landscape.

I aimed to create a daily news summarizer that could run at key moments throughout the day - morning, noon, and evening. At these times, the system would gather news items from various sources, prioritize them by relevance, and provide readers with a comprehensive overview of the current news landscape, offering multiple perspectives.

What sets my news digest apart from other alternatives is its categorization of sources into 'media groups' based on shared attributes. I've identified four main categories: mainstream media, alternative media, Russian media, and spiritual media.

- Mainstream media refers to well-established outlets like the New York Times, Reuters, and Washington Post. Alternative media, on the other hand, includes any outlet or individual that offers a critical perspective not found in mainstream sources. This group often covers stories and angles that are ignored by established media.

- Alternative media, on the other hand, includes any outlet or individual that offers a critical perspective not found in mainstream sources. This group often covers stories and angles that are ignored by established media.

- The Russian media category provides a unique perspective on global events, such as the war in Ukraine. I find it refreshing to get an alternative view on complex issues like this one.

- The spiritual media group is perhaps the most intriguing. While some might dismiss this category as 'new age' or even dangerous, I believe it offers interesting conversations about various topics, including military activities and secret space programs. For personal reasons, I find this area fascinating and, if approached with caution, can be a rich source of information.

To categorize sources into distinct media groups, I'd rely on the human psychological concept of "social hierarchy detection" or "group leadership evaluation". This principle suggests that humans naturally organize themselves around leaders in a group, who help the group survive and thrive. Similarly, I believe the media landscape is organized around dominant players. In mainstream media, outlets like The New York Times and The Washington Post come to mind. For alternative media, I'd identify influential figures such as Alex Jones, whose channels consistently challenge the mainstream media's coverage and draw a large audience seeking this type of information. This is likely due to widespread dissatisfaction with mainstream media, which may stem from valid reasons.

To gather news from mainstream media outlets, I would utilize Really Simple Syndication (RSS), a technology developed by Aaron Swartz, a remarkable open-source advocate. RSS enables any website to organize its content – be it news articles or other types of information – in a standardized format, facilitating seamless communication between different domains and data structures. This standardization allows for easier access and use of the data, as it clearly defines which fields are required, optional, and what the expectations are.

Information is power. But like all power, there are those who want to keep it for themselves.

While this approach may be effective for mainstream media outlets, it wouldn't apply to alternative media groups due to a fundamental difference: not all alternative media sources have websites that support RSS feeds, unlike the standardized structure found in mainstream media.

To extract necessary information from an alternative media group, I decided to use Telegram, a messaging platform. The reason for this choice is a story worth sharing. In 2020, as the pandemic locked down our planet, I began questioning the main narrative being spun by mainstream media. Upon thorough investigation of media coverage at the time, it became clear that it fit the definition of propaganda: one-sided reporting with no airtime given to experts in their respective fields to challenge the stories being presented at such a speed and momentum that viewers barely had time to stop, think, and reflect on what was being said.

As I continually questioned the information provided by mainstream media sources about the event unfolding in 2020, I found myself seeking out alternative voices and media outlets that offered a higher level of competence and conversational quality on this topic. The gap between these reliable sources and the mainstream media's coverage grew increasingly apparent, prompting me to take a more proactive role in addressing and shedding light on some of the key issues surrounding this pivotal event.

As I continued to explore online interviews, I eventually came across a conversation with a former investment banker from Denmark who had founded a political party called JFK21. Intrigued by what I learned, I decided to take the S train to their offices one day for an open meeting they were hosting.

While there's more to the story than what I'll share here, I can summarize that my investigation ultimately revealed that this political party, led by a former investment banker turned whistleblower, is likely connected to the Danish secret service. This insight came from his own words during one of our meetings, where he mentioned that everyone who attends their gatherings is monitored by the secret service. Interestingly, the party's logo bears a resemblance to Palantir's software, which is used by the Danish police and may also be utilized by the secret service.

What struck me as intriguing was the leader's persistence at our meetings, urging attendees to sign up for and actively use the Russian-based Telegram messaging service, founded by Pavel Durov. He was adamant that we get involved on this particular platform.

On Telegram, you'll find a diverse range of perspectives offering constructive criticism and concerns about prevailing narratives, often without rigorous fact-checking or investigation. This is what many people, including myself, are seeking: reliable insights from experts who aren't afraid to challenge dominant ideas, question prevailing wisdom, and venture outside the comfort zone of groupthink that still plagues many scientists. Unfortunately, not much has changed since the days when the geocentric model held sway, with experts often pointing fingers and mocking rather than engaging in meaningful dialogue.

Upon closer examination, Telegram's Application Programming Interface (API) stood out as particularly noteworthy. As a developer, I was struck by its unique design, which provides unprecedented read/write access to data on the platform. This granular control allows for highly detailed insights into activity within specific chat groups and channels.

Considering the Telegram platform's features and the leader's enthusiasm for recruiting users, I suspect it may be an ideal tool for monitoring and tracking dissidents. The rich granular API access and lack of encryption, as revealed by a Signal developer, make it a paradise for surveillance agencies like the Danish secret service. Furthermore, the state-sponsored mainstream media in Denmark produced a three-part series called "The Shadow War," which aimed to discredit criticism against the state, notably found on Telegram, by linking it to Russian propaganda and misinformation. Given the leader's ties to Russia and the platform's Russian origins, this was an easy feat. However, for me, Telegram represented a refreshing alternative to the mainstream media, where dissenting voices were heavily censored. In contrast, Telegram allowed users like myself to freely express our opinions, making it an attractive option to facebook and twitter who at the time were engaged in censorship in collaboration with the United States to name just one state actor.

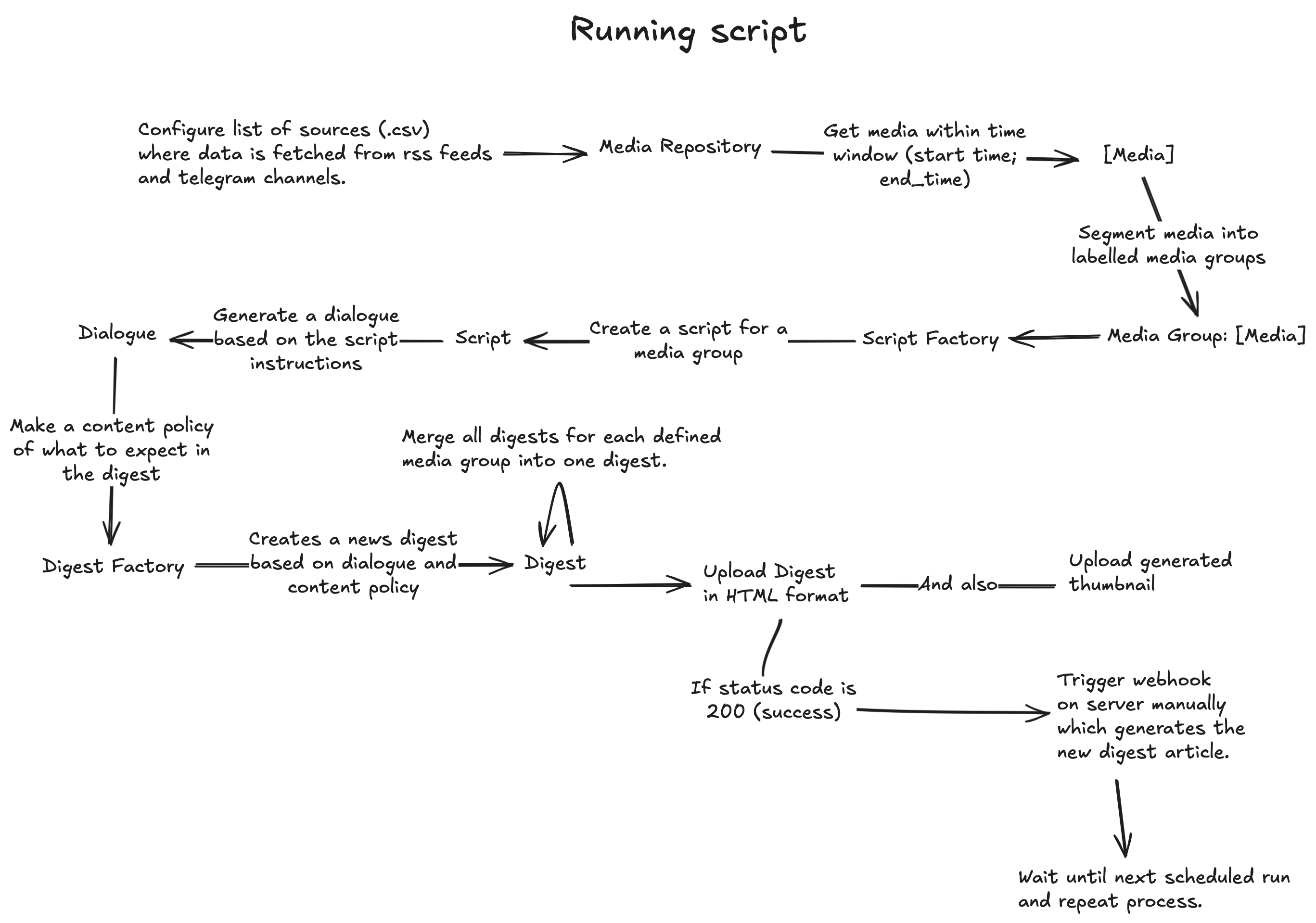

After identifying the primary sources of information - mainly mainstream media using RSS Feeds, along with alternative sources utilising Telegram, and falling back on an RSS Feed if available - I proceeded to model the news digest feature.

The whole reason for starting this project in the first place was because of the spark of interest worldwide in the possible use of LLM's. Baked into the Script as I call it is the ability to make a series of prompts, some structured and some unstructured. As you can see from the diagram of the domain model, two LLM's are currently supported: ChatGPT and the lesser known open source version from Meta called Ollama. Mind you, this portion of the code was written in the summer 2024 and since then, which was only a few months ago, the competition has become even more heated, in the LLM space, with more competitors like the Chinese Deepseek throwing their hat into the ring.

Initially I thought it would make sense to model a script, just like it's known from film world for instance, where each script which includes a dialogue complete with lines for the actors in the movies to read. Because I knew I needed multiple prompts and expected multiple responses back I thought this particular design, or facade pattern as it's called, was fitting.

Next I would fit the dialogue produced by the script into a digest which supports HTML formatting. Perhaps not known to most people is the fact that LLM's like Ollama and ChatGPT actually returns their responses in valid markdown format and so I thought of enabling the digest to produce both markdown and html format which are translatable amongst each other.

The code currently running producing the news digests on a daily basis is written in a very approachable and readable language called python. This language allows for easy prototyping and going from ideas on paper to actual code and results that can be verified and analysed. I've set up what is known as a cron job, which wakes a server at specified time intervals and runs the program. The currently scheduled runtimes are:

- Morning: 8 AM (Central European Time)

- Noon: 12 PM (Central European Time)

- Evening: 6 PM (Central European Time)

Each media group has a list of media which contains a list of populated documents with fields like title, text, date and more. To explain the above executable diagram: For each media group a script is produced which in turn generates the dialogues. These dialogues are turned into several sub-digests, one for each media group, if the digest content policy allows it. This policy specifies what it expects the dialogue to have, for instance a header with a certain identifier or one to many list of news items. The sub-digests are then merged into a single digest, which ends up being the final product send to the main monolith server which is what finalizes the process by creating an article with this content and also the produces thumbnail. Besides the actual data, performance benchmarks are also collected giving me an indicitator have much time it takes to create a digest for a given language, where I currently have language support for english and danish, seeing as they are the two main audiences for my newspaper.

With all that in mind, the news digest currently produced doesn't actually make use of any responses from LLM's, even though the implementation details clearly state that it supports such. The reason being is that I found, after many iterations of the news digest, some where the code would use LLM's, mostly the one offered by Ollama (llama3:8b) that what I was really looking for was just an easy way to paraphrase and display a list of sorted news items. The sorting method currently employed extracts the top 21 documents for each media group supported, based on an algorithm called TF-IDF (Term Frequency-Inverse Document Frequency):

This algorithm simply takes a list of candidate documents normalizes the text length such that they are equal in length, removes any common words (also called stopwords) and counts the frequencies of the most used words, or tokens as it's also called. Imagine in a list of fictional documents, the word Trump used frequently, then these documents with the most occurrences of Trump and other such frequent words amongst the corpus of documents would be elevated to the top and presented in the news digest. Upon quality evaluation, when this news digest feature has been up and running for a couple of months now, this algorithm seems to work well and finds what people in aggregate are discussing.

Other reasons why I initially abandoned using LLM's in the digest was because of key characteristic one has to consider, I've come to find, when working with LLM's:

- Performance: When I was testing LLM's both locally (such as Ollama) and API based (such as ChatGPT), the response for each prompt would range from 15 seconds to 30 seconds, where each prompt was asking the LLM in question to summarise a piece of text coming from a media document. Adding to this that I have not one, but several prompts to go through before a digest is complete then we're no longer talking about a mere 30 seconds but an accrual prompt response times that could take upwards of 30-45 minutes.

- Quality: For summaring pieces of text, LLM's are generally very good. This is what I believe to be their strong suit. Not logical analysis and thinking, but taking as input text to be summarised or turned into some shape or form.

- Context Window: If you move outside of the input context window, which dictcates the allowed length of the prompt, then you can end up with a situation where parts of your prompt is not included and altogether ignored. This can lead to issues where a structured prompt is in fact not structured at all because that part of the prompt was outside the allowed context window and your input was too large.

- Hallucinations: LLM's when you strip away all the investor hype is basically a next word predictor and sometimes the mess up and produce outputs that are not what you were looking for at all or was in fact asking for in the first place. This is hard to detect and verify with code if we're talking about unstructured prompts and would usually require a human being to verify the response somehow.

- Censorship: Both ChatGPT and Ollama made by Meta (formerly known as facebook) are Sillicon Valley companies based in the state of california, which is primarily a blue democratic state, or stronghold if you will. This means, that it is inevitable that political biases makes the way into the LLM's, which is especially an issue here which has turned me completely away from using ChatGPT for instance on content originating from the Telegram platform, because it would immediately get flagged as disinformation and the essense of the information would get lost and not properly summarised. This is less of an issue I've found with Ollama.

Adding to this the fact that my performance metrics showed that it would take

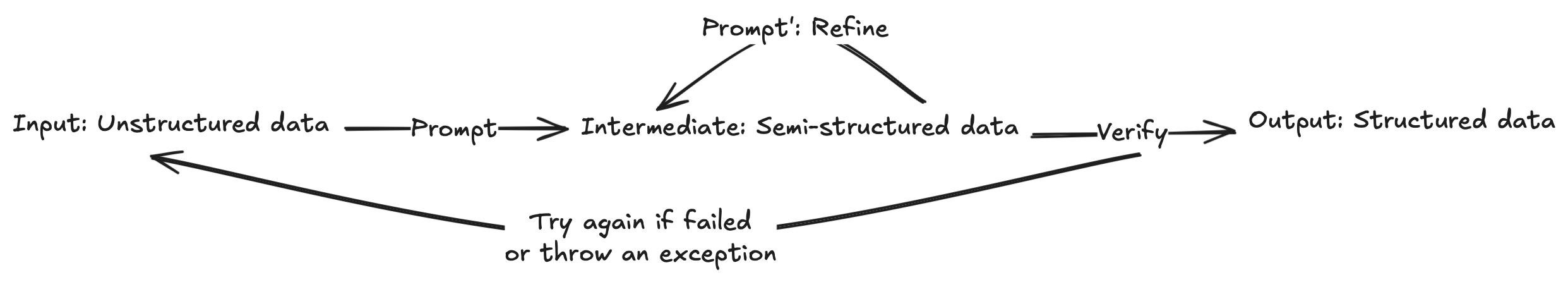

The difference between a structured prompt and an unstructured prompt is that a structured prompt, when asking one of the supported LLM's, is tasked with specifically returning the response in a given format, such as Java Script Object (JSON), which has become one of the most commonly known serializable data transport methods found in modern programming development environments due to it's wide built in support in many programming languages. Another structured format could also be XML.

Unstructured data makes up a large amount of the information found on the internet. I count myself one of those people who immediately thought of all the potentially wonderful applications if an LLM could take as input unstructured data and turning it into structured data.

To make it more clear what I mean by that is, imagine you have a document online. That document is unstructured (raw text) meaning you would have to write very specific code in order to extract the necessary details from that document. This obviosuly doesn't scale if you then have another document with a completely different structure or data representation. But, if you could throw into the mix, an LLM which could serialize the unstructured data into a specific target data structure with initial prompt requirements of what the data structured such contain of fields and their various types, then that would surely be a breakthrough.

In my experience and my domain of summarizing the news, it worked some times, but other times the structured data returned would be slightly off and not be in a correct format causing.